Rendering Normals in Studio - 3Delight vs. Iray

EDIT: The nature of what I'm trying to accomplish has evolved since original posting, so I thought it best to rename the topic to something a little more indicative of what I'm doing.

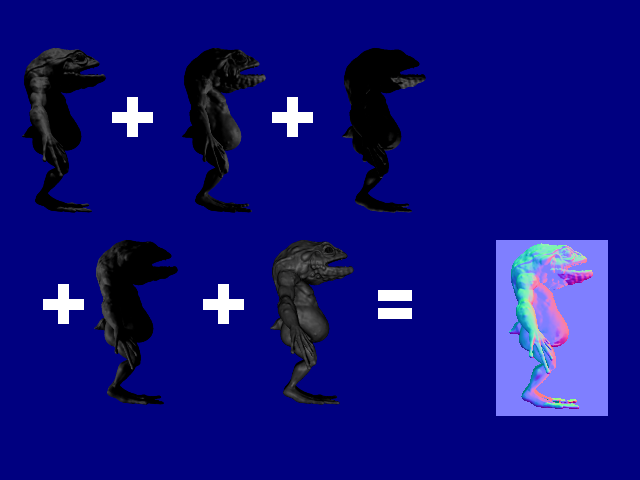

Short of it: I'm trying to create sprites with normal maps, and have been using the following method to do so up until now:

I've been sticking with 3Delight, largely because I understand its lighting better and renders are a lot quicker. I thought about switching to Iray and investing in a new graphics card, but only if I could reproduce similar output with that renderer. Very brief research and some YouTube tutorials give me the impression that I don't want to use a pitch-black background and a single directional light for the renders, because dimly lit scenes prolong render time. But is there any way in Iray to just create a "light-only" render of an object, like those Amphibioid X's in the above example? Given that Iray materials seem to have exponentially more fields in the shading pane, I wouldn't know where to begin.

Comments

That might well be possible using Canvases, in the Advanced tab of the Render Settings pane, but I'm not sure which combinations you'd want.

Knowing nothing about how canvases work...I noticed there's an option for Canvas Type to be "Normal." That could be what I need to address another question I had, which would eliminate the need to render five separate times with different directional lights. That'd be amazing - but I don't want to get my hopes up just yet. I'll be doing some reading up, or videoing up on Canvases.
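For reference, here is a rough sketch of how five directional-light passes could be combined into a normal map per pixel. It assumes (this is not confirmed anywhere in the thread) a plain white, purely diffuse surface lit in turn from the left, right, bottom, top and front, so that each pass records roughly `max(0, normal · light_direction)`. The function name and argument order are made up for illustration.

```python
import math

def normal_from_light_passes(left, right, bottom, top, front):
    """Combine one pixel's brightness (0..1) from five lighting passes
    into an (r, g, b) normal-map colour in 0..255.

    Assumes Lambertian shading with lights along -X, +X, -Y, +Y and +Z.
    """
    nx = right - left      # lit from the right minus lit from the left
    ny = top - bottom      # lit from above minus lit from below
    nz = front             # lit from the camera direction
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        # Pixel was unlit in every pass (e.g. background): use "facing camera".
        return (128, 128, 255)
    # Normalise, then remap each component from -1..1 to 0..255.
    return tuple(int((c / length * 0.5 + 0.5) * 255 + 0.5) for c in (nx, ny, nz))
```

A Normal canvas, if it works as hoped, would replace all of this with a single render.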

There is one for "depth" or "distance", too. Those should produce a grey-scale image which you can use to create the normals you want... However, it will not be a 256-grey bitmap. It would be a floating-point or ??? long-int 32/64 format, within the EXR file. (I honestly don't remember which data format the EXR uses for that output canvas.)

In any event, the produced image will have to be "converted" into grey-scale using a min/max setting for the upper and lower bounds of the 0-255 values, which is the standard range for greyscale as a 256-palette image. (That would be done in Photoshop or another art program.) You will then use that grey-scale image to create your "normal map", unless you can find a way to skip the middle-man or the "normal" canvas output works for you.
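The min/max conversion described above can be sketched in a few lines. This is only an illustration of the idea, not what Photoshop or GIMP do internally: `depth_to_grey`, `near` and `far` are hypothetical names, the depth values are assumed to be already loaded from the EXR as rows of floats, and nearer pixels are mapped to brighter greys so the result can double as a height map.

```python
def depth_to_grey(depth, near, far):
    """Map floating-point depth values to 0..255 greys.

    depth: rows of floats (e.g. read from a depth/distance canvas).
    near, far: the lower and upper bounds; values outside are clamped.
    """
    if far <= near:
        raise ValueError("far must be greater than near")
    span = far - near
    grey = []
    for row in depth:
        grey_row = []
        for d in row:
            t = (d - near) / span            # 0.0 at near, 1.0 at far
            t = min(1.0, max(0.0, t))        # clamp to the chosen range
            grey_row.append(int((1.0 - t) * 255 + 0.5))  # nearer = brighter
        grey.append(grey_row)
    return grey

# Example: a background far behind the object clamps to black.
print(depth_to_grey([[100.0, 150.0, 200.0, 5000.0]], near=100.0, far=200.0))
```

Choosing `near` and `far` tightly around the object matters: with only 256 grey levels, a range that also covers a distant background leaves very few levels for the object itself.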

https://www.daz3d.com/forums/discussion/109146/create-a-depthmap

Don't have Photoshop at the moment, so I've had to jump through some extra hoops to see if these are working. I've had the most luck with the Normal Canvas, which does indeed deliver what it promises, albeit in a different coloring convention than what I'm familiar with. I can't quite figure out how to work around this, at least this early in the morning.

Specular and Diffuse have given me less consistent results. In a few cases, I've seen the Diffuse canvas produce a solid white image of the object, but I've seen it come out differently based on what I'm rendering. It's probably a weird thing happening within the converter that I have no control over at the moment. But I also used a plane in the background of the scene for Test 3 that wasn't around in Test 2. Anyway, I'll show what I'm trying to achieve for Diffuse and Normal in the attachments. I'll do a little more testing, but in the meantime, I have a few questions:

NOTE... You can render your animation as still-frames. (Single images)

Just make a unique pose at every key-frame and tell it to render frames 1-6 at 1-frame-per-second.

Poof, instant product output, no matter what the lighting is. (Put all your lighting into "groups", and just hide the unwanted groups on the first frame.) That is also a great way to capture a generic base-shadow too... for the animation.

No way to skip the EXR part. That is the standard, professional format/container used to hold the extended data that you just can't get with PNG or TIFF or JPG. GIMP is a free art program that can read the EXR file data to create the "layers" you need to represent them. It can also create "normals" from grey-scale height-maps or depth-maps.
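To give a feel for what "creating normals from a height-map" means, here is a minimal sketch using central differences. It is not GIMP's actual filter; `height_to_normal_map` and `strength` are illustrative names, heights are assumed to be floats in 0..1 with brighter meaning closer to the viewer, and the green channel is assumed to point up the image.

```python
import math

def height_to_normal_map(height, strength=1.0):
    """Turn rows of height values (0..1) into rows of (r, g, b) normals (0..255)."""
    rows = len(height)
    cols = len(height[0])

    def at(x, y):
        # Clamp coordinates so edge pixels reuse their nearest neighbour.
        return height[min(rows - 1, max(0, y))][min(cols - 1, max(0, x))]

    result = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            # Slope of the surface along the image x and y axes.
            dx = (at(x + 1, y) - at(x - 1, y)) * 0.5 * strength
            dy = (at(x, y + 1) - at(x, y - 1)) * 0.5 * strength
            # The normal leans away from rising slopes; image y grows
            # downward, so the sign of the y term is flipped back.
            nx, ny, nz = -dx, dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            out_row.append(tuple(
                int((c / length * 0.5 + 0.5) * 255 + 0.5) for c in (nx, ny, nz)
            ))
        result.append(out_row)
    return result
```

A completely flat height map comes out as the familiar uniform light blue, (128, 128, 255), and raising `strength` exaggerates the tilt of sloped areas.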

https://www.gimp.org/

P.S. GIMP can now do 32-bit and 64-bit floating-point value editing, so EXR data will be no problem to use. 64-bit floating-point values are limited to specific functions and filters, but that shouldn't be a concern to you.

So after a lot of pressing buttons and observing what happened, I've run into a few issues.

Curious if anyone can offer insight onto any of these roadblocks.

Tangentially, I know that the LineRender9000 Fresnel Reflection v camera creates something like a normals render, albeit greyscaled. Don't know if I could use that as a makeshift depth map or not, though I'm more inclined to think not. It may very well just be applying a greyscale to a render similar to the Normal canvas.

Pure white (and pure black) are generally to be avoided. Noise means there isn't enough light falling on the surface to converge it in a reasonable time/number of samples.

The AoA Advanced lights are all for 3Delight, not Iray.

Did you try adjusting it? If you're using Photoshop, there's a specific command for HDR tone mapping.

LineRender9000 is also 3Delight, using the Scripted renderer.

Hey Richard. I think I've seen your name in enough of my posts that I have to say, I really appreciate all the help you've given. I'm fairly certain my usage of Daz Studio is esoteric, so I'm glad to see a few people who can chime in and help with some of the more niche problems I run into. Now, onto the talking points:

The ultimate goal of the topic is to determine which renderer and method to use in my pipeline. It's an ongoing internal debate I've been having over the course of the year, and next year is when I shift focus from graphics to programming. 3Delight + LR9K is the most intuitive method as of now, but has its share of shortcomings. The biggest problem is that I have to go into every object I render and replace materials with custom shaders that have their own lighting properties when assembling a normal map--same goes for diffuse-only and specular-only renders. But if there were a way to skip over the five separate lighting preset renders, that would save a tremendous amount of time - enough to justify switching rendering engines, if need be. But if I could do it using 3Delight, that might be the best course.

By a Diffuse render you mean one that is flat colour? I think in PBR that would be albedo, though I'm not certain - and I don't know if Iray can do it.

As for normal renders - you want a normal map showing the direction, in world space, that the surfaces are facing? In principle you could use Shader Mixer - take the output normal from any normal/bump/displacement brick and feed it, possibly after transformation to a different space, into the Colour input of the Surface Root shader. My attempts seemed to give a white-out if I tried transforming the normal, and I'm not sure if the results of just feeding it straight into the output were right or not:

It's been a while since I've stared puzzlingly at the post. It's my fault, for not learning how to build/read shaders up until now (and I still plan on doing that). But after a lot of trial and consideration, I think it's best that I stick with Scripted 3Delight for the project. For simplicity. I saw a lot of noise in my Iray test renders, and there seem to be more Render Settings parameters that deal with said noise in different ways than I can wrap my head around. My scene-only lighting always seemed to be dim, even when cranking up the intensity of the light source--could also be attributed to something that needs adjusting in Render Settings, but it's hard to keep track of all of that.

And I'd still be doing a lot of material/shader swapping, which was one of the things I was hoping I could avoid by switching to Iray.

I had a crazy thought just now--the kind that seems so absurdly simple and yet plausible, that you just have to try it anyway, despite decades of grim reality crushing your childlike enthusiastic spirit. I may not know how to write a shader, but I do know that at the end of the day, the direction of a pixel-sized normal is encoded into RGB values, and "blue faces you." ...be right back!
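For anyone following along, the "blue faces you" encoding can be written out as a small sketch. It assumes the usual tangent-space style mapping where each component of a unit normal in -1..1 is remapped to 0..255, with X in red, Y in green and Z (toward the viewer) in blue; which way X and Y point varies between tools, so treat the axis directions as an assumption. The function names are made up for illustration.

```python
import math

def encode_normal(nx, ny, nz):
    """Encode a direction vector as an (r, g, b) colour in 0..255."""
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("a normal needs a non-zero length")
    # Normalise, then remap each component from -1..1 to 0..255.
    return tuple(int((c / length * 0.5 + 0.5) * 255 + 0.5) for c in (nx, ny, nz))

def decode_normal(r, g, b):
    """Approximate inverse: map 0..255 channels back to -1..1 components."""
    return tuple(c / 255 * 2 - 1 for c in (r, g, b))

print(encode_normal(0, 0, 1))   # facing the viewer: mostly blue
print(encode_normal(1, 0, 0))   # facing along +X: mostly red
```

Decoding is only approximate because 8-bit channels cannot represent 0.0 exactly; 128 decodes to a value slightly above zero.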

edit: Turns out I can't recreate the lighting conditions necessary to make a scene that's color-coordinated to match the normal maps, though I learned a lot more about why the RGB values are what they are. Maybe it's time to look into shaders again.

Was reading up on Sketchy, when I discovered it came with an Iray environment that does solid lighting in all directions, giving the flat color render I'd been looking for in Iray. My question transforms:

Hi, author of LineRender9000 here.

I think what you want is some Shader mixer cameras that isolate the variables you're looking for. It's true that the Fresnel reflected v camera will give a "normals"-esque output, but it converts it to greyscale so the outliner doesn't get confused by the multiple colors touching each other. In your case you could probably load up the Fresnel reflected v camera in the shader mixer and remove that greyscale conversion.

In any case, I would say shader mixer cameras in conjunction with LR9k AutoRender's multiple pass functionality is probably what you need to isolate the different "variables" in the output image. The drawback from what I'm gleaning from this chain is that you don't have shader cameras that output what you want. I know it's possible to output specular and diffuse passes in isolation using shader cameras... something I've been expanding upon in my own work, although I haven't developed shader cameras for those explicit purposes yet.

The reason why you're not finding Fresnel reflected v indicated in any of the supporting scripts is because that shading is done from a Shader camera. (If you load Fresnel reflected v camera, change that to be the active camera and render with the LineRender9000 script you'll see it's just a "regular" render using a shader dynamically applied to the whole scene.) The point of using shader cameras in LineRender9000 was so that there was no need to replace all the surfaces/materials in the scene to get different outputs.

I hope this is helpful.