Do we have a simple way of importing MoCap into Daz yet?

I'm looking to buy one of the Rokoko Smartsuits for motion capture, but I can't find any end-to-end tutorials that show the captured animation being brought into Daz itself (so that it can be rendered in Iray).

Do we know of any modern solution which is relatively simple?

A member of Rokoko who wasn't very familiar with Daz was kind enough to offer the following suggestion (comment below), but I'm wondering if there's a less primitive pipeline?

Comments

"First, you have to make sure that your character skeleton in Daz Studio matches your exported character skeleton from Rokoko Studio. I do not know exactly what sorts of character skeletons are available in Daz Studio, but if either Mixamo or the Maya HumanIK skeletons are available. Those would work. If none of those skeletons are available, or you want to use a character with a different kind of skeleton. You would first need to retarget your mocap animation from an exported Rokoko Studio animation, onto your custom Daz Studio character."

"As far as I am aware, Daz Studio, unfortunately, does not currently have any functionality for retargeting animation. So you would need to do this in a different software. I would recommend using Blender as it is free and works well with Rokoko Studio." "To get started, follow this article to install the Rokoko Blender plugin: Blender - Download Links & Walkthrough If you do not currently have the option to stream your mocap animation data from Rokoko Studio to Blender, you can instead import the animation by first exporting it from Rokoko Studio, using the export tab. Then, import the animation into Blender by using File->Import->.fbx. You should now have your mocap animation inside Blender. You then export your character (or just your character skeleton) from your character in Daz Studio and import it into Blender. So you now have both the exported Rokoko animation and Daz character in Blender."

"When those are ready, you can then retarget the Rokoko Animation onto your Daz character by following this tutorial: Blender 1.1 Retargeting : Rokoko Help & Community When you have the animation retargeted onto your Daz character, export the Daz character animation as a .bvh and import it into Daz Studio by following the following tutorial. Be aware that since Daz Studio is outside our normal area of expertise, I have found a tutorial on how to do this from an external site, so the efficacy of the method described in the article may be subject to some inconsistency: Diffeomorphic: Importing an Animation Back into DAZ Studio"

"Hopefully, this should leave you with a fully animated character in Daz Studio."

Your best bet is getting Maya Indie. As much as I hate to admit it, nothing beats HIK.

I have used the Blender method for retargeted FBX from UE4; the feet still slide.
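One common cause of sliding feet after retargeting is that the hip (root) translation is copied unchanged onto a figure whose legs are a different length. A frequently used mitigation is to scale the root translation by the ratio of target to source hip height. The Python sketch below illustrates that heuristic only; it is not what Blender or the Rokoko add-on actually do, and the function name and hip-height inputs are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def scale_root_translation(root_positions: List[Vec3], src_hip_height: float,
                           tgt_hip_height: float) -> List[Vec3]:
    """Scale per-frame root (hip) positions by the target/source hip-height ratio.

    A heuristic only: it keeps stride length roughly proportional to leg length,
    but it does not pin the feet to the floor the way an IK-based solver would.
    """
    if src_hip_height <= 0:
        raise ValueError("source hip height must be positive")
    ratio = tgt_hip_height / src_hip_height
    return [(x * ratio, y * ratio, z * ratio) for (x, y, z) in root_positions]

# Example: an actor with a 100 cm hip height driving a figure with a 90 cm hip height.
scaled = scale_root_translation([(0.0, 100.0, 0.0), (10.0, 100.0, 5.0)], 100.0, 90.0)
```

Scaling all three axes keeps the vertical hip height consistent with the shorter legs as well as shortening the stride.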

Daz provides FREE plugins to send G8 figures to UE4, Unity and Blender.

You're spending nearly $2,500 USD on a professional mocap suit that includes plugins and software to stream/record performance data to UE4, Unity and Blender.

The Rokoko rep has accurately described the state of things.

Check the DAZ Blender forum, as one user, @Benniewoodell, may have had some success exporting G8 motion data from Blender to Daz Studio using the MHX converter from Diffeomorphic.

I'm not sure how, or if, the Rokoko retargeter works with Diffeo-imported G8 figures, however.

If you are not comfortable with Blender/Diffeo MHX and truly need to use Daz Studio instead of UE4 or Unity for rendering, then your only other option is a dedicated third-party motion retargeting app that can generate and export Daz G8-compatible BVH and is also supported by the Rokoko suit's capture/export software.

The only two are Autodesk MotionBuilder (MOBU) and Reallusion's 3DXchange Pipeline (RL 3DX).

Also, I hired Alexander Goryun (the man is very, very good at Houdini, and at Daz as well) on upwork.com to develop a tool in Houdini, now that it has KineFX. It works, sometimes, but is affected by an export bug that has yet to be fixed. When that is resolved, I'll release it, because he made it dead simple to use: there is no "characterization" stage like in Autodesk products, it groks G8M/F, G3M/F and all the mocap sources I had access to, and it works very well. I haven't tried it, but it probably works with the free version of Houdini.

@wolf359 Now you know I am only slightly kidding when I say that Houdini can do anything :) But why anyone would want a "DS-compatible" BVH is the real question. The Houdini tool Alex wrote writes JSON, and he provided a script to import the motion into DS, because DS's BVH handling is badly broken for importing as well as exporting. I looked at the script, and literally all it does is apply the rotations (and translations, if there are any) to the corresponding bones, one by one. Why the DS BVH importer can't handle that is a mystery to me.
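The import step described above (apply each bone's rotation, plus its translation where present, frame by frame) can be sketched as follows. This is a minimal illustration only: the JSON layout (`fps`, `frames`, per-bone `rot`/`trans`) and the `set_key` callback are hypothetical stand-ins, not the format Alex's tool writes and not the Daz Studio scripting API.

```python
import json
from typing import Callable, List

# Hypothetical layout:
# {"fps": 30, "frames": [{"hip": {"rot": [x, y, z], "trans": [x, y, z]}, ...}, ...]}
SetKey = Callable[[int, str, str, List[float]], None]

def apply_motion(doc: str, set_key: SetKey) -> int:
    """Apply rotations (and translations, if present) bone by bone, frame by frame.

    Returns the number of keys written through the (hypothetical) set_key callback.
    """
    data = json.loads(doc)
    count = 0
    for frame_index, bones in enumerate(data["frames"]):
        for bone_name, channels in bones.items():
            # Every bone carries a rotation; only some (e.g. the hip) carry a translation.
            set_key(frame_index, bone_name, "rot", channels["rot"])
            count += 1
            if "trans" in channels:
                set_key(frame_index, bone_name, "trans", channels["trans"])
                count += 1
    return count

# Usage: collect the keys instead of writing them to a figure.
written = []
sample = '{"fps": 30, "frames": [{"hip": {"rot": [0, 10, 0], "trans": [0, 95, 0]}, "chest": {"rot": [5, 0, 0]}}]}'
apply_motion(sample, lambda f, b, kind, v: written.append((f, b, kind, v)))
```

The point of the sketch is how little logic is needed when the file format already uses the target's bone names and axes.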

The tool has a serious bug in it (or rather, Alex insists that the bug is in Houdini and that SideFX will get around to fixing it), but sometimes it does work. It supports every mocap source I can think of, and for targets it supports G8M/F and G3M/F.

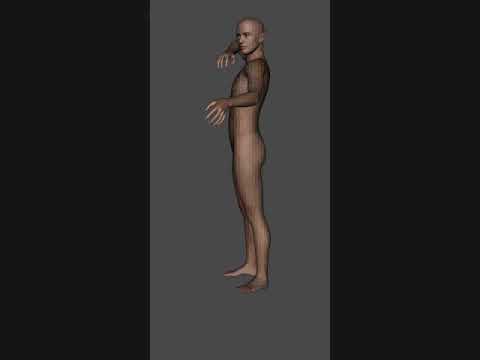

Check this out and compare it to the Wander take on ActorCore. It does an amazing job of preserving every nuance, and I didn't use a particularly high number of iterations in the solver.

I'll be happy to share this when it works better, and whoever is interested can help test all the sources and targets.

Interesting. I've found the BVH import to actually work pretty well, although I haven't done a whole lot of it.

@Gordig Well, I suppose the importer is better than the exporter, but it is rather brittle: if the BVH is not exactly the way DS expects it to be, the results are the stuff of nightmares. It makes no effort at all to figure out how to interpret the BVH, so BVHs from systems like Perception Neuron are completely unusable. Exporting is a different story: it appears to sometimes switch axes depending on the number of frames exported, so the result of exporting 1 frame is somehow not always equal to the first frame of a two-frame export. I didn't investigate further; I just asked Alex to make it work with however a 1-frame BVH represents the armature.
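To make the brittleness point concrete: BVH lets every joint declare its own channel count and rotation order in the HIERARCHY section, so two files that look alike can still order their Euler angles differently. A robust importer has to read these declarations rather than assume them. The minimal Python reader below only lists joints and their rotation orders; it is an illustration, not a reconstruction of how the DS importer works, and the sample file is made up.

```python
from typing import List, Optional, Tuple

def bvh_channels(text: str) -> List[Tuple[str, List[str]]]:
    """Collect (joint name, channel list) pairs from a BVH HIERARCHY section."""
    joints: List[Tuple[str, List[str]]] = []
    current: Optional[str] = None
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "MOTION":
            break  # the hierarchy ends where the frame data begins
        if tokens[0] in ("ROOT", "JOINT"):
            current = tokens[1]
        elif tokens[0] == "CHANNELS" and current is not None:
            count = int(tokens[1])
            joints.append((current, tokens[2:2 + count]))
    return joints

def rotation_order(channels: List[str]) -> str:
    """E.g. ['Zrotation', 'Xrotation', 'Yrotation'] -> 'ZXY'."""
    return "".join(c[0] for c in channels if c.endswith("rotation"))

SAMPLE = """HIERARCHY
ROOT Hips
{
  OFFSET 0 0 0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT Spine
  {
    OFFSET 0 10 0
    CHANNELS 3 Yrotation Xrotation Zrotation
    End Site
    {
      OFFSET 0 10 0
    }
  }
}
MOTION
Frames: 1
Frame Time: 0.0333333
0 0 0 0 0 0 0 0 0
"""

for name, chans in bvh_channels(SAMPLE):
    print(name, rotation_order(chans))  # Hips ZXY, then Spine YXZ
```

An importer that hard-codes one rotation order would silently mis-rotate the Spine joint in a file like this.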

I think that's much of the problem we experience trying to get DS and, say, Blender to interoperate: we do so using file formats that know nothing about DS or Blender, instead of specific formats that know about both. Alex's JSON for importing the animation back is an example of the latter, and I think I'm going to write another plugin to do the analogous operation during export, so it won't be necessary to use a general, app-agnostic format like BVH at all. The intent is not to get any two apps, now and in the future, to interoperate, but to get DS specifically to interoperate with a small set of other specific apps that exist now.

To be fair, all the BVH importing I've done so far was made with a G8 that I hacked into Mixamo, so no real retargeting was necessary.

Then the tool will probably be of interest to you, because Mixamo is supported. You just load the single-frame BVH of your character in rest pose, load the FBX from Mixamo, and away you go. I asked Alex for some specific wording to use when asking SideFX when we can expect a fix, because it'll be awesome when it works 100%. No more Autodesk, no more creating characterizations, just two files and a click.

Your script works with Houdini Indie ($269 per year rental), yes?

I would be surprised if it works with the free "Apprentice" version, considering how crippled its export options are, but you should get confirmation, as that clarification will be relevant to budget-minded Daz Studio Genesis users who plan to render everything in Iray or 3DL after harvesting mocap in some version of Houdini.

Yes, it works with Indie, for sure. I'm still harboring hope that it might work with Apprentice because while the hair retargeter didn't, that was because it was trying to output Alembic. The retargeter may work because it's just writing json and it'd be much more of a jerk move for them to disable that in Apprentice. Not that companies can't be jerks of that magnitude, but I do believe SideFX is better than, say, Autodesk in that regard.

Anybody have Apprentice that wants to try it? With the understanding that it doesn't yet work in all circumstances?

I'll give it a shot.

OK, on Linux, I just have to put this file in ~/houdini18.5/otls

I'm not sure what ~/ translates to on Windows, which I assume you run, probably My Documents or AppData.

Here it is. I really hope this works...

Apparently I only have 18.0 installed. Do you suspect that will be a problem?

edit: never mind, my 18.0 installation was screwy, so I installed 18.5. Proceeding.

Do a BVH import and apply it to a Genesis (G1) figure, as it has far fewer bones; you can later move the animation up to other figures. The first time you do a BVH import, you will want to create and save a bone map so that the imported bone names match the DAZ bone names.

Okay, found one of my old posts here, and below: https://www.daz3d.com/forums/discussion/comment/5703441/#Comment_5703441

For mocap from suits like Rokoko or Noitom's Perception Neuron, the number of bones/sensors is small compared to the number of bones in Gen3/8, so it's best to start your BVH import with G1F. You only have to map the bones from the mocap software to DAZ once. The bone mapping is the only real trick to getting the data into DS from these mocap suits. I have the Kickstarter version of the Perception Neuron suit. You can download sample files from their website. Here is the bone mapping to get it into DS:

The following are the 7 bones that I have found that need to be remapped:

a. SPINE 3 -----> CHEST

b. LEFT SHOULDER -----> LEFT COLLAR

c. LEFT ARM -----> LEFT SHOULDER

d. LEFT FOREARM -----> LEFT FOREARM

e. RIGHT SHOULDER ----> RIGHT COLLAR

f. RIGHT ARM -----> RIGHT SHOULDER

g. RIGHT FOREARM -----> RIGHT FOREARM
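The seven remaps above amount to a lookup table applied to joint names before the data reaches the figure. A minimal Python sketch follows; the exact source names (Perception Neuron-style `Spine3`, `LeftArm`, and so on) and the Genesis 1 bone IDs (`chest`, `lShldr`, and so on) are assumptions standing in for the labels in the list, so verify them against your own files. Note the trap the table encodes: the suit's "Shoulder" is the figure's collar, and the suit's "Arm" is the figure's shoulder.

```python
from typing import Dict, List

# Assumed identifiers: the list above gives descriptive labels (e.g. "SPINE 3",
# "LEFT ARM"), not the exact strings in the files; check your own BVH and figure.
NEURON_TO_G1: Dict[str, str] = {
    "Spine3": "chest",
    "LeftShoulder": "lCollar",
    "LeftArm": "lShldr",
    "LeftForeArm": "lForeArm",
    "RightShoulder": "rCollar",
    "RightArm": "rShldr",
    "RightForeArm": "rForeArm",
}

def remap_joints(names: List[str], bone_map: Dict[str, str]) -> List[str]:
    """Rename mapped joints; unmapped joints keep their original names."""
    return [bone_map.get(name, name) for name in names]

print(remap_joints(["Hips", "LeftShoulder", "LeftArm", "LeftForeArm"], NEURON_TO_G1))
```

Leaving unmapped names untouched matches the post's observation that only these seven needed manual remapping.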

Once you've got a G1 animation, it's pretty easy to go to G2, G3 and G8. Here is a link to the thread: https://www.daz3d.com/forums/discussion/250726/so-if-an-animation-is-saved-using-genesis-it-works-on-genesis-3/p1

For more on Perception Neuron import to DS see my post here: https://www.daz3d.com/forums/discussion/61827/motion-capture-perception-neuron-daz-import

Got it loaded up in 18.5 Apprentice, but everything is greyed out. I have yet to figure out where the file needs to be located in order for Houdini to see it, so I just opened it from the file browser. I don't know if that's relevant or not.

I'll ask Alex where it goes on a Windows system.

He said the hda goes in C:/Users/xxx/Documents/houdini##/otls

I didn't have that folder, so I dropped it in the otls folder in asset_store. Then I created an otls folder in the main directory, and it's working now (or at least more so than before).
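The per-user install locations mentioned in this thread (`~/houdini18.5/otls` on Linux, `C:/Users/xxx/Documents/houdini##/otls` on Windows) can be resolved with a small Python helper. This is a sketch under those two reported paths only; the function name is hypothetical, macOS is not covered, and Houdini's `HOUDINI_USER_PREF_DIR` environment variable can relocate the folder, which the sketch ignores.

```python
from pathlib import Path

def houdini_otls_dir(version: str, system: str, home: Path) -> Path:
    """Per-user otls folder for a Houdini version, per the paths given in this thread.

    Only Linux and Windows are covered; anything else raises ValueError.
    """
    if system == "Linux":
        # ~/houdini18.5/otls
        return home / f"houdini{version}" / "otls"
    if system == "Windows":
        # C:/Users/<user>/Documents/houdini18.5/otls
        return home / "Documents" / f"houdini{version}" / "otls"
    raise ValueError(f"no path recorded for {system!r}")
```

Usage: `houdini_otls_dir("18.5", platform.system(), Path.home()).mkdir(parents=True, exist_ok=True)` creates the folder if it is missing, which is the situation described just above; then copy the .hda into it.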

It works, although a weird thing happens: the original BVH was exported at 30 FPS and the Mixamo animation was 30 FPS, and a prompt pops up asking if I want to continue exporting the JSON at 30 FPS, but when I import it into DS, it applies four keyframes for every five frames.

I'm no wizard with any of the 3D software I use, but I'm very much a novice with Houdini, and I don't see where to set the FPS within Houdini. Could that be the problem?

edit: never mind, found it. Re-exporting.

re-edit: and it works as expected now.
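The "four keyframes for every five frames" symptom is exactly the ratio 24/30 = 4/5, which is what you would see if keys sampled at 24 fps (Houdini's default scene rate) were laid onto a 30 fps timeline. That reading fits the FPS fix above, but it is an inference, not something confirmed in the thread. A small Python check of the arithmetic (the helper name is hypothetical):

```python
from fractions import Fraction
from typing import List

def keyed_frames(n_keys: int, key_fps: int, scene_fps: int) -> List[int]:
    """Scene frame that each key lands on, for keys spaced 1/key_fps seconds apart.

    Integer floor division keeps the result exact (no float rounding).
    """
    return [(i * scene_fps) // key_fps for i in range(n_keys)]

print(Fraction(24, 30))         # 4/5 keys per scene frame
print(keyed_frames(8, 24, 30))  # [0, 1, 2, 3, 5, 6, 7, 8]: frames 4 and 9 get no key
```

Setting the Houdini scene rate to match the DS timeline makes the ratio 1, so every frame receives a key.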

Maybe upload a short BVH and I will see if I can figure out the DAZ bone mapping. Might be much easier solution.

I installed Rokoko Studio and exported the BowAndArrow sample mocap file to BVH using the 3ds Max Biped skeleton (I tried the others and they didn't work).

In DS, create a new scene, load G1M and select it in the Scene tab. Drag the BVH file into the scene and use the defaults for the Importer Options.

Bones seem to map pretty well except for the hands. The hands come in with a Bend that I could not fix via the importer. I fixed the hands by deleting the Left and Right Hand Bend keys and then adding new Bend keys with zero bend at the start and end of the animation.

Here is a link to the video without the hand bends adjusted:

Here is a link to the video with the hand bends fixed:

@FirePro9

There's a good reason why we're interested in a bona fide retargeter. Just mapping the bones is a technique I think we've all known about for a long time, and in the vast majority of cases it just doesn't work well enough for production, for example if you have two characters that need to interact, or if your target armature differs from the source.
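For comparison with plain bone mapping, here is the simplest thing a retargeter does beyond renaming bones: it transfers each bone's rotation relative to its rest pose, so a source captured in one rest pose can drive a target whose rest pose differs. The Python sketch below uses world-space quaternions and the formula target = (source_pose * inverse(source_rest)) * target_rest. It is a textbook illustration, not how the Houdini/KineFX tool or HumanIK work; real retargeters also handle bone lengths, IK and solver iterations, which this ignores.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length assumed

def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def q_conj(q: Quat) -> Quat:
    """Conjugate; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def axis_angle(axis: Tuple[float, float, float], degrees: float) -> Quat:
    """Unit quaternion for a rotation about a unit-length axis."""
    half = math.radians(degrees) / 2.0
    s = math.sin(half)
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)

def retarget_bone(src_pose: Quat, src_rest: Quat, tgt_rest: Quat) -> Quat:
    """Carry the source bone's world-space change from rest over onto the target's rest."""
    delta = q_mul(src_pose, q_conj(src_rest))
    return q_mul(delta, tgt_rest)

# Example: the source arm rests 45 degrees lower than the target arm; the actor
# raises it a further 30 degrees, so the target should also rotate 30 degrees.
src_rest = axis_angle((0.0, 0.0, 1.0), 45.0)
tgt_rest = axis_angle((0.0, 0.0, 1.0), 0.0)
src_pose = q_mul(axis_angle((0.0, 0.0, 1.0), 30.0), src_rest)
tgt_pose = retarget_bone(src_pose, src_rest, tgt_rest)
```

Copying `src_pose` straight onto the target instead would add the 45-degree rest difference to every frame, which is one reason naive bone mapping breaks when the armatures differ.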

The tool already could not be any easier, and it already knows about all G8 and G3 target armatures, as well as Perception Neuron, Mixamo, iPiSoft, ActorCore, XSens, and Rokoko Motion for the sources, so while there are additional steps, none can be described as "hard".

I'd suggest you try the tool as well; when you see the results (assuming you don't hit the known bug), I think you might ultimately agree with the above.

@Gordig

Awesome! So, in your opinion, it "works" with Apprentice?

It has nothing to do with Apprentice vs. Indie, but did you encounter any weird rotations/translations? Did your source animation have any extreme rotations? I haven't been able to characterize what provokes the bug.