[SOLVED/I bought the laptop - read for benchmarks] Laptop RTX3080Ti GA103S chip - any users?

mwasielewski1990

Posts: 343

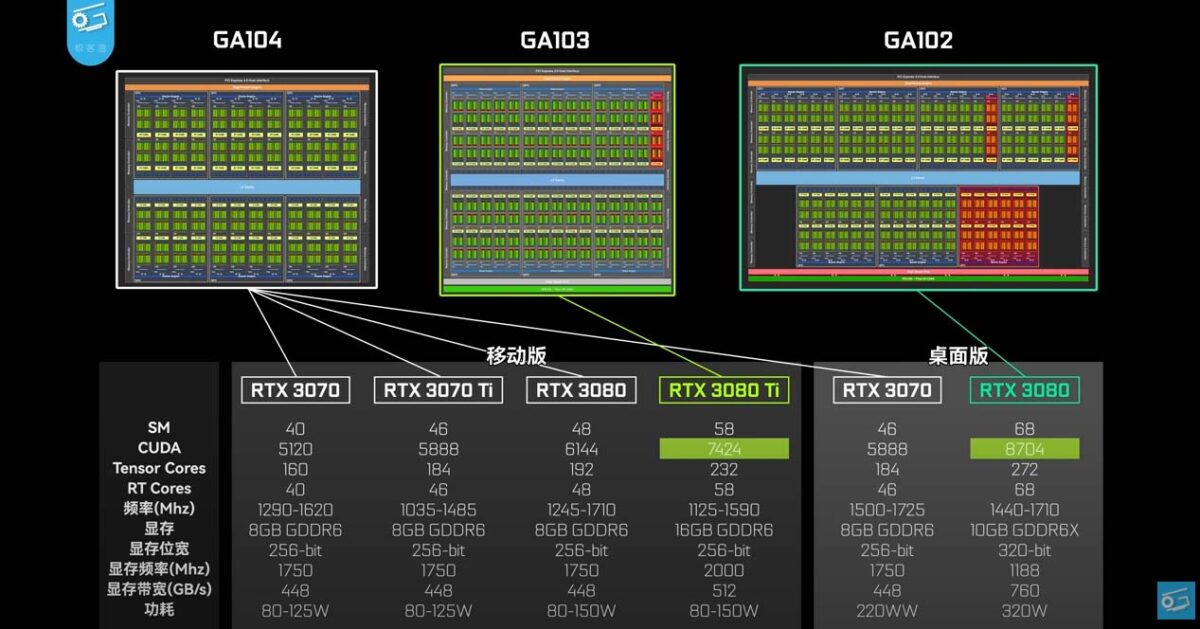

So... about a month or two ago Nvidia introduced a refreshed 3080 for laptops, this time a Ti version with 16GB of VRAM and over 7000 CUDA cores. The maxed-out version of this GA103S chip can pull 150W of power, with boost up to 175W. With specs like these, I got to thinking... Is this the time to become a digital nomad?

I'm a desktop RTX3090 user. My main concern is the actual performance of the maxed-out version. On paper (calculating cuda cores to power draw ratio, clocks, etc) this card theoretically matches a Desktop 3070/3070ti. But that's the point - theoretically. Does anybody have a laptop with this GPU chip?

According to my calculations, the laptop 3080Ti should be about half as fast in Iray as a 3090. That would still be amazing if true... It's like dual 1080Tis, or a single 2080Ti... in a laptop.

I think the only ones on the market right now with the maximum power draw (175W) are the Alienware x15 R2 and x17 R2 laptops, and the MSI Raider GE76 (the 2022 version, which is coming very soon). Yes, I know they're 4K USD each.

So... any experiences yet? Or should I be the digital nomad pioneer and pull the trigger on the Alienware laptop?

Comments

I can kinda give you some feedback. I run a 3090 in my main desktop machine, but decided to look for a laptop that I could take with me on business trips to do rendering. I couldn't find a 3080ti that was available and didn't have issues, so I grabbed a Maingear with a 3080 (16GB version). It's actually quite powerful, and at least for 3D rendering, I'd put it ahead of the Titan RTX I used to own (it has more cores than a Titan RTX). If I'm satisfied with a 3080 laptop, I would imagine the 3080ti would do you more than fine.

I should also point out that while I originally bought this just to use on the road for long business trips, I've actually found it zippy and powerful enough to lie in bed or on the couch and work on renders instead of sitting at the computer desk. It's not as powerful as the 3090, but definitely way more than I thought it would be.

Cool! Would you care to run the benchmark scene that is provided somewhere on this forum so that we could actually measure how fast your GPU is? Last year's 16GB 3080 is only a tiny bit slower than the 3080ti, so this should be a good starting point. Also, what's the power limit on your card, is it fully unlocked? (As far as I remember, the GPU you have has a max power draw of 150W with a boost to 165W, so only 10W less than the 3080ti.)

Benchmark is gonna be a bit because I'm about to leave for one of said business trips. Here's the gpu-z screen for the nvidia 3080 while rendering.

The Alienware laptops seem to be well reviewed, and have good cooling systems. I'm trying to psych myself up to buy one with a 3080ti, but at nearly $4k when done configuring, I'm having a hard time pulling the trigger...

Well, the price is a subjective matter, but yes, $4K is "a bit" for a laptop. Still, as a desktop replacement you can just grab and travel with anywhere, it seems reasonable.

The MSI pre-production units also look promising (there are reviews on YT), but we still don't know details like the color accuracy of the screen, etc. For me, thermals are the most important thing, because if you buy a laptop with a 175W GPU, the last thing you want is for it to throttle. As far as I've seen, the Alienware x17 uses the same cooling design as last year, so it handles the GPU all right, but it runs into some throttling problems on the CPU, because the 12700H and 12900HK run insanely hot when utilizing all 14 cores (check the gaming benchmarks, the "efficient cores" hit over 100c in Cyberpunk and throttle). This did not happen with the previous model, which only had 8 cores (11800H).

Also, I did some research and apparently a new Lenovo Legion 7 is coming this year, also with a 3080Ti and the new Ryzen 6xxx series CPUs. I think it would be wise to wait and see how this situation develops? AMD chips tend to be on the cooler side as far as I can remember...

If you could take a look at this:

https://www.daz3d.com/forums/discussion/341041/daz-studio-iray-rendering-hardware-benchmarking/p1

In your spare time, please run the benchmark. It would mean a lot to everyone wanting to buy a laptop with the 16GB 3080 or 3080ti :)

Okay people, I bought the Alienware x17 R1, with the slightly older mobile 3080 GPU (GA104 chip with all CUDA cores unlocked, TGPMAX=165W, 16GB VRAM), and here are my thoughts after running the above-mentioned benchmark scene:

This GPU is a monster! The bench scene was completed in 2 minutes, 53 seconds. That is total time, so it includes loading the scene to VRAM; the render itself was possibly a couple of seconds faster.

That gives us a total of 173 seconds.

A desktop RTX 3090 does the scene in 101 seconds, so according to my calculations (101 / 173), this laptop GPU has about 58% of the performance of a desktop 3090! Woah! It has beaten a desktop 2080ti by ~10%, and is on par with a desktop 3070.
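For anyone who wants to redo this comparison with their own numbers, here is a minimal sketch of the arithmetic. The helper names `parse_time` and `relative_speed` are made up for illustration, and it assumes both times are "total time" figures from the same benchmark scene, so scene loading is included on both sides.

```python
def parse_time(text: str) -> int:
    """Convert a 'M:SS' benchmark time into seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + int(seconds)


def relative_speed(reference_s: float, candidate_s: float) -> float:
    """Fraction of the reference card's speed that the candidate reaches.

    Render speed is inversely proportional to render time, so the
    ratio is reference time divided by candidate time.
    """
    return reference_s / candidate_s


laptop_s = parse_time("2:53")   # laptop 3080 time from the post above
desktop_3090_s = 101            # desktop 3090 time from the post above

speed = relative_speed(desktop_3090_s, laptop_s)
print(f"{laptop_s} s -> {speed:.1%} of a desktop 3090")
```

With the times quoted above this lands a little over 58%. Since total time includes loading the scene, treat the result as a rough figure rather than a precise ranking.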

There is one small detail though:

While the GPU actually can draw the whole 165W during gaming (CP2077 tested), it only draws a max of 145-147W during renders. Perhaps this is because the BASE TGP of this chip is 150W, so DAZ can't really utilize the additional "Nvidia Boost 2.0" power used by games. So while rendering we're 15W short, but still... Having something faster than a 2080Ti in a laptop is just mind-blowing.

Also a note on the Alienware itself - it's not without flaws. The official app for controlling thermals and power levels could be more intuitive. There is also a weird keyboard problem - it randomly turns off and becomes unresponsive. This can be solved by uninstalling one of the built-in apps, which is non-essential. Apart from that, the thermals of this design are awesome: the GPU sits at ~67-70c during renders, while TMAX=87c. So we have enough headroom for situations like high ambient temps, etc. The CPU during gaming spiked to a max of 92c (but for a split-second, literally), averaging about 85c.

So... I can only imagine what the next generation of Nvidia GPUs will bring. A desktop 3090 in a laptop 1-2 years from now? I think it's quite possible.

I couldn't find your benchmark in the thread, but I'm assuming you're running the latest version of Daz? If so, I believe your system has more speed left in it yet, as I have the 3070 laptop (150W) and running Daz 4.15 it did 2 minutes 46 total time. 4.20 has sapped speed from what I can see (I haven't upgraded from 4.16), and if Daz fixes that your render times should be faster still.

Not many laptop benchmarks here so good to see another one.

I have not yet contributed my benchmark, but yes, I tested it on DAZ 4.20. I can also confirm DAZ 4.15 is faster than 4.20 (I migrated to the latest DAZ build on my RTX3090 PC a few days ago, and it also slowed down a bit). However, I don't believe it's DAZ's fault; perhaps the new Iray renderer version reduced the render speed?

Can I ask about the TGP of your laptop's 3070 - is this 150W with or without the boost? My laptop is theoretically capable of 165W power draw (base TGP being 150W, so +15W boost), but Iray maxed out at 154W when I let it run for ~2 hours of continuous rendering.

Firstly a correction, my laptop is 125W base with 140W max power. I also render close to the base with the max power used being 126W.

I wonder if that is because heat is causing the system to limit the use of the max power mode? They've just released water-cooled laptops (and they are on the shelves at the shop I bought this from). They get higher power consumption when the water cooling is in use:

Power numbers are at about 3.30 on that video.

That's not the case. My GPU tops out at 155W while being nowhere near the throttle limit of 87c (it tops out at ~75c). I'm still 10W short :D

Yeah, I think if you're hitting max power on other benchmarks but not when rendering it could be something else. I'm also sitting around baseline watts when rendering and my GPU temp tops out at 73c even on long renders.

The CPU on the other hand thermal throttles quite quickly, have you checked your CPU temps when rendering to see if your system does that too?

I'm not using the CPU during renders, but even if I were - no, the Alienware laptop I have has 4 fans, and it keeps the CPU at a peak of around 88c at max load (while TMAX=100c). Thermals are surely not the issue. Also, I tried rendering with CPU+GPU, but it somehow dragged the GPU down to 70% usage... Ditching the CPU solved this problem.

I have a theory: topping out at baseline wattage might be a driver-specific issue, where the driver does not allow "Nvidia Boost" to kick in for certain productivity apps (?). I'm able to reach the max of 165W exclusively in games. But in order to confirm this theory, we would need to check it using some other renderer, maybe Cycles from Blender?
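One way to compare power draw across Iray, Cycles and a game is to log it the same way in each case. Below is a rough sketch that polls `nvidia-smi` once per second and reports peak and average wattage. It assumes `nvidia-smi` is on the PATH and that the laptop driver actually reports `power.draw` (some mobile GPUs return `[N/A]`, which is skipped here). The function names are just for illustration.

```python
import subprocess
import time

# Fields requested from nvidia-smi, in this order.
QUERY = "power.draw,temperature.gpu,utilization.gpu"


def parse_sample(line):
    """Parse one CSV line into (watts, temp_c, util_pct).

    Returns None when a field is missing or not numeric, e.g. '[N/A]'.
    """
    try:
        watts, temp, util = (float(field.strip()) for field in line.split(","))
    except ValueError:
        return None
    return watts, temp, util


def read_sample():
    """Ask nvidia-smi for a single reading from the first GPU."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_sample(out.splitlines()[0])


def summarize(samples):
    """Peak/mean power and peak temperature, or None if nothing was logged."""
    if not samples:
        return None
    watts = [s[0] for s in samples]
    return {
        "peak_w": max(watts),
        "mean_w": sum(watts) / len(watts),
        "peak_temp_c": max(s[1] for s in samples),
    }


def log_power(duration_s=60, interval_s=1.0):
    """Poll the GPU for duration_s seconds and summarize the readings."""
    samples = []
    end = time.monotonic() + duration_s
    while time.monotonic() < end:
        sample = read_sample()
        if sample is not None:
            samples.append(sample)
        time.sleep(interval_s)
    return summarize(samples)
```

Start a render or a game, then call `log_power(120)` from a Python prompt and compare the `peak_w` values between workloads. If Cycles also stops near the base TGP while games reach 165W, that would point towards the driver or boost behaviour rather than Iray itself.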

You should be using some CPU during rendering. Even with GPU-only enabled, my desktop sits at 30% CPU usage and my laptop sits at 25% when rendering, because Daz is still using the CPU for some functions. I get a momentary spike to 100% at the start of every render, and if it stays there (and is slow), I know it's fallen back to CPU.

Looking at overall temp numbers, the laptop temps seemed all right and the CPU was maxing out at around 88-91 degrees C. Another member suggested running HWinfo, and then I saw that although the GPU is fine, the CPU would throttle individual cores during rendering, even though it was only at 25% usage. Were your temp numbers taken using HWinfo? If not, I would be interested to hear if you experience the same.

Doesn't happen on the desktop, those temps are well under what the laptop gets.

I'll have to try a game on the laptop to see if it uses the full 140W.

Iray rendering indeed uses less energy than a highly demanding game does. So I would not be concerned by those wattage numbers.

The 100% spike at the beginning of the render is the CPU acting as an intermediary while loading the assets into RAM or the GPU's VRAM (dunno which). Honestly, I wasn't monitoring individual cores, but throttling isn't a big problem in my opinion if you're not gaming... 100c on a single core + throttle is normal for all 11th- and 12th-gen Intel chips, they're designed to do that. 100c, by Intel's spec, is still safe by the way. Your laptop might have a custom peak temp offset (my Alienware has 95c), so the throttle threshold will vary from laptop to laptop.

You can also download Intel Extreme Tuning Utility and play with your H or HK series laptop chips (despite what the Intel website claims, some laptop 11800Hs ARE unlocked, to my shock). You can try to undervolt your chip, or limit its PL1 and PL2 turbo power limits according to your preference. I left it on default, as most games I play don't throttle the 11800H (only Cyberpunk does it in a couple of locations, but very rarely). For non-gaming situations, I don't really care.