RTX 4000 Officially Revealed

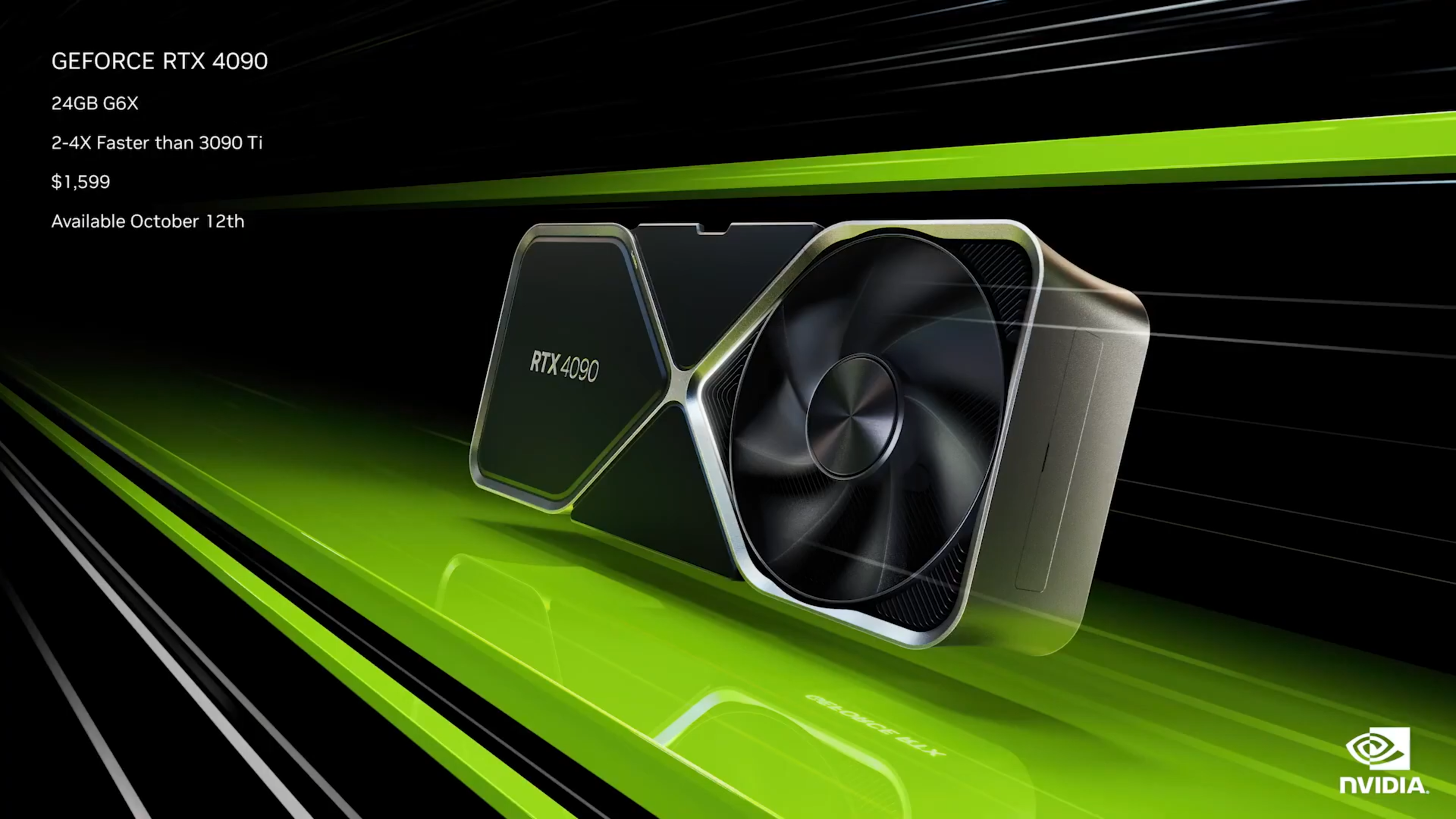

4090 24GB $1599 Launches October 12

4080 16GB $1199 Launches in November

4080 12GB $899 Launches in November

The 4080 12GB looks more like a "4070" than a 4080, since its specs and performance sit a full tier below the 16GB model. This post will be updated with more specs as they drop.

| Model | GeForce RTX 4090 | GeForce RTX 4080 (16GB) | GeForce RTX 4080 (12GB) |
|---|---|---|---|
| **GPU Engine Specs:** | | | |
| NVIDIA CUDA® Cores | 16384 | 9728 | 7680 |
| Boost Clock (GHz) | 2.52 | 2.51 | 2.61 |
| Base Clock (GHz) | 2.23 | 2.21 | 2.31 |
| **Memory Specs:** | | | |
| Standard Memory Config | 24 GB GDDR6X | 16 GB GDDR6X | 12 GB GDDR6X |
| Memory Interface Width | 384-bit | 256-bit | 192-bit |
| Power Draw | 450 Watts | 320 Watts | 285 Watts |

Link to Nvidia's site:

https://www.nvidia.com/en-us/geforce/graphics-cards/40-series/

Professional series RTX 6000 link:

https://www.nvidia.com/en-us/design-visualization/rtx-6000/

WARNING: There is no guarantee that the new cards will work with Daz Studio Iray on launch day. As of this edit on 9/21 the Iray Dev Blog has not posted any update about whether they have Lovelace (that is the code name) working with Iray yet. Additionally, even after the Iray team releases a new SDK, it still takes time for Daz Studio to implement this new SDK for users. So what I am saying is to just be aware that if you buy Lovelace on launch it might not work, and if you do not have a backup GPU that can run Iray you may be stuck until Daz gets the update. It is certainly possible that Daz Studio Iray supports Lovelace on day 1, but past history has shown this not to be the case most of the time.

Comments

That's a huge power draw. And I thought my 1100 watt power supply was overkill. That'll be the norm pretty soon. It'll be like running a hair dryer for hours.

Wow, the 80/90 jump looks huge in the specs. I wonder how the actual benchmarks will play out.

No NVLink...

Is that official? I was wondering what was under the cover on the end of the 4090 card render.

I'll wait for real world reviews of performance, power use and whether they'll run ok on PCIe 3 x16, but I'm thinking I'll be skipping this gen.

It is clear they want to differentiate the x90 from the x80 more than the 3080-3090 were. The 3090 was largely a Titan with Titan features stripped out. But now that is out of the way, the x90 is going to be the new top tier class. They have basically moved all the names down if you think about it. It used to be x80 was the top card with previous x90 models actually being dual chip GPUs (remember those?!) Then came the first Titan during the Kepler era, as well as the first x80ti model with the 780ti. Now we have x90 as the top naming scheme, with room for a x90ti on top of that, LOL. The 4080 12GB is very weird, as this is basically what the 4070 SHOULD be, but for some reason they decided to call it a 4080 12GB. I find this very silly, and a bad move by Nvidia. I think everybody is going to spot the goofiness of the 4080 12GB. IMO it is not a good look. What does that mean for the actual 4070 when it eventually releases?

At any rate, the new professional cards are also in the works. The current formerly Quadro series is called the A series, the new series will be comically called the L series. I am surprised that Nvidia actually went with that given how "L" can be connected to "Loser". But whatever, the L series will of course cost similar to the A series, so not many of you will be interested in them. Especially now that the 4080 has a 16GB model. We can finally break past the 12GB standards we have seen for so long.

Some other observations. The stream talked a lot about the new Ray Tracing technology. So I have to wonder how that translates to Iray. Historically Iray has benefitted the most compared to video games in these performance uplifts. Nvidia is touting 2 to 4 times "faster than the 3090ti" for the 4090 and even the 4080. We might just see the high side of that uplift for Iray. But of course, that is just my speculation, and until we get some benchmarks you cannot be certain what the real performance will be. I believe 2x will be the baseline at minimum right now.

But also, that power draw. If you have been watching the rumor/leak scene you already saw that coming. It wasn't the 600 Watt insanity some thought it would be, but 450 Watts for the 4090 is still a lot (and just to gloat, exactly what I always said it would be when people were trying to say it would use 600 or even 800 Watts, LOL.) Though I didn't think Nvidia would be silly enough to call the 7680 core model a freakin 4080. I did get that wrong. Oh well. Anyway, you guys need to look up UNDERVOLTING if you are concerned about power. Igor's Lab took the 450 Watt 3090ti all the way down to 300 Watts and only lost about 10% performance while saving 150 Watts. I fully expect that to be the case with the 4090 as well. If you can replicate that on the 4090, then you can run around 300 Watts or so and still massively beat the 3090 by almost 2x. This does raise the question of why Nvidia would overclock so much, but I am not Jensen Huang. At any rate, I would not stress too much over the power draw because of this. Undervolting is not hard, anyone can do it. It is not dangerous, you will not burn down your house or break your PC. If anything, you can save energy and also extend the longevity of your GPU.
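To put the undervolting numbers into perspective, here is a rough sketch of the arithmetic. It only uses the figures quoted in this post (450 Watts stock, 300 Watts undervolted, about 10% performance lost). The function name is made up for illustration, and treating the 4090 as exactly 2x a 3090 at stock is an assumption, not a measured result.

```python
def efficiency_gain(stock_watts, tuned_watts, perf_retained):
    """Return how much performance-per-watt improves after undervolting.

    perf_retained is the fraction of stock performance that remains
    (0.90 means 10% was lost).
    """
    stock_efficiency = 1.0 / stock_watts            # stock performance normalised to 1.0
    tuned_efficiency = perf_retained / tuned_watts
    return tuned_efficiency / stock_efficiency


# Figures quoted for the undervolted 3090ti: 450 W -> 300 W, ~10% slower.
gain = efficiency_gain(450, 300, 0.90)

# Assumption: a stock 4090 is 2x a 3090, and it undervolts like the 3090ti did.
ASSUMED_4090_VS_3090 = 2.0
undervolted_vs_3090 = ASSUMED_4090_VS_3090 * 0.90

if __name__ == "__main__":
    print(f"Performance per watt improves by about {gain:.2f}x")
    print(f"Undervolted 4090 vs 3090 (assumed): about {undervolted_vs_3090:.1f}x")
```

With those inputs the card does roughly a third more work per watt, which is where the "almost 2x" at around 300 Watts estimate comes from.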

https://www.nvidia.com/en-gb/geforce/graphics-cards/40-series/rtx-4090/

At the end of page, there is a button for "View Full Specs"

Yes, that is correct. Nvlink is sadly dead at this point. This does give the 3090 one advantage, since you can Nvlink them.

PCIe doesn't affect Iray at all in any previous testing. When you think about it, you can understand why. The data for Iray remains in the GPU VRAM the entire render. It is not constantly swapping data in and out like other software or video games. Some data exchanges, certainly, but not at the levels of other software. So PCIe bandwidth has never been an issue for Iray. The only thing it might affect is perhaps the initial loading of a scene, as the PC sends it to the GPU to render. But this is only when starting a render, and will barely make any difference, if it makes any difference at all. When I load a scene to my 3060, which has half the bandwidth of the 3090, I don't see any difference in loading times when starting a scene.
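A back-of-the-envelope sketch of why the initial scene upload barely matters. The per-lane rates are the published PCIe 3.0 and 4.0 figures (8 and 16 GT/s with 128b/130b encoding). The 24 GB scene size and the function names are illustrative assumptions, and real transfers will be slower than these theoretical peaks.

```python
# Raw transfer rate per lane in GT/s for each PCIe generation.
GT_PER_SECOND = {3: 8.0, 4: 16.0}

# PCIe 3.0 and 4.0 both send 128 payload bits in every 130-bit symbol.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(generation, lanes):
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    gigabits_per_second = GT_PER_SECOND[generation] * ENCODING_EFFICIENCY * lanes
    return gigabits_per_second / 8  # bits -> bytes


def upload_seconds(scene_gb, generation, lanes=16):
    """Best-case time to copy a scene of scene_gb gigabytes to the GPU."""
    return scene_gb / pcie_bandwidth_gb_s(generation, lanes)


if __name__ == "__main__":
    scene_gb = 24  # assumption: a scene that completely fills a 24 GB card
    for generation in (3, 4):
        bandwidth = pcie_bandwidth_gb_s(generation, 16)
        seconds = upload_seconds(scene_gb, generation)
        print(f"PCIe {generation}.0 x16: {bandwidth:.2f} GB/s, "
              f"{scene_gb} GB upload in about {seconds:.2f} s")
```

Even in this worst case the difference between 3.0 and 4.0 is a one-time cost on the order of a second, against renders that run for minutes or hours.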

Considering that the 4090 is supposedly going to take up 4 slots, you would be lucky to fit 2 onto a standard motherboard and into some cases. And NVLink was only ever really useful if you were using the professional grade Quadro cards that made full use of the feature.

When I bought the 2070 Super, the possibility to add another and connect them with NVLink to 'double the VRAM', was one of the reasons for buying that card.

Although, when I was about to buy the second card, the mining boom had made it impossible to buy any GPU's

Those 4090 specs look nice on paper. will be interesting to see how it really performs

Got it. Thanks for the pointer.

Makes sense, but I'm one of those degenerates that would also try gaming on one.

They're quoting 850 min for the PSU. That seems - hopeful - unless they've got the power spikes under control. Also an FE card plus a waterblock would be north of £1800 UKP. That's a lot of cakes.

Even there PCIe doesn't have much impact. The 6500xt has a bad bottleneck in very specific situations on PCIe 3.0, but that is a very special case. That card only has x4 and this is combined with a pitiful 4GB of VRAM. The card only has problems when it needs to start swapping VRAM. If the card simply had 8GB of VRAM it would not have that issue. So you have to saturate both VRAM and need lots of bandwidth at the same time...on a card that packs 24GB.

You are not going to use up 24GB unless you start gaming at 8K for some insane reason. Additionally it will still run at x16 and so will have access to quite a lot of bandwidth, it isn't being choked to death like the sad x4 on the 6500xt. The 3090 already has close to 1 TB/s of memory bandwidth as it is, and I don't think any game uses it.

If you are on PCIe 3.0, then I also must assume you have an older CPU. That CPU is likely to be your bottleneck before the PCIe factors in.

There is always a bottleneck. A CPU could be faster. The drives could be faster. The RAM could be faster. All of these have some impact on gaming performance. I imagine we will see lots of people doing tests with PCIe 3.0 and Lovelace given that 3.0 is still popular. But I don't think it will be much of an issue. If it actually is any kind of bottleneck...I am certain it is a very small one.

As for the power draw, the same undervolting strategy applies. You might drop a very small amount of performance, but in turn drop 100 Watts very easily. You don't have to drop to 300 Watts like Igor did. You can go between and try to hit the 3090 level of 350 Watts. At that level you will likely only lose a tiny amount of gaming performance, to the point where you just don't care. This card is doubling the 3090 here. Is there any game the 3090 can't play at 60 fps 4k? You can double that now to 120. Don't forget the 4000 series is getting an improved DLSS 3.0 to drive fps even higher. Even if you lose a little performance from undervolting, it will still destroy every game you have for the next couple years. And if you really are into the high refresh rates, I would assume you would be looking at new build soon regardless. You are going to want Ryzen 7000 or maybe next gen 8000 at some point to drive high refresh rates no matter what GPU you buy.

But I stress to those who only use Daz and content creation apps, you don't need to worry about anything in this post, LOL. You don't need balance for Daz Iray.

@outrider42,

Don't forget the RTX 6000 (not to be confused with the Quadro RTX 6000 of yore) coming in December...

With a max TDP of only 300 watts.

ETA: It's also a PCIe gen 4 card, meaning that the rest of the 40 series line will also be gen 4 (not 5) based. Food for thought.

I touched on that briefly. Since the only advantage the formerly Quadro line offers to Iray users is VRAM, I don't see the lineup really drawing many Iray users just for the 48GB. While it does use only 300 Watts, again I stress the value of undervolting. Igor undervolted the 450 Watt beast 3090ti down to 300 Watts and it ran fantastic, only losing 10% of performance in the worst cases. The former Quadro line is always slower than their gaming counterparts, and that performance lines up with how the A6000 would perform.

So if you undervolt the 4090 down to 300 Watts...the only thing the RTX 6000 offers is VRAM, and is going to cost WAY more. The A6000 cost $5000, so the RTX 6000 should be about the same or even more. Undervolt the 4090 and save over $3000. You would be basically paying $3000 for double VRAM. Sadly the 4090 has no Nvlink, but you could Nvlink 3090s and get a 48GB pool of VRAM.
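Here is the cost comparison worked out as a small sketch. The 4090 price comes from the first post. The RTX 6000 price is only the guess made here (the same $5000 as the A6000), so treat the result as a rough estimate. The names are made up for illustration.

```python
RTX_4090_PRICE = 1599        # USD, launch price from the first post
RTX_4090_VRAM_GB = 24

RTX_6000_PRICE_GUESS = 5000  # USD, assumption: same as the A6000 price quoted above
RTX_6000_VRAM_GB = 48


def price_per_gb(price_usd, vram_gb):
    """Dollars paid per gigabyte of VRAM."""
    return price_usd / vram_gb


# What the extra 24 GB costs if the price guess holds.
savings = RTX_6000_PRICE_GUESS - RTX_4090_PRICE
extra_vram_gb = RTX_6000_VRAM_GB - RTX_4090_VRAM_GB

if __name__ == "__main__":
    print(f"Buying the 4090 instead saves ${savings}")
    print(f"That is ${savings / extra_vram_gb:.0f} per extra GB of VRAM")
    print(f"4090:     ${price_per_gb(RTX_4090_PRICE, RTX_4090_VRAM_GB):.2f} per GB")
    print(f"RTX 6000: ${price_per_gb(RTX_6000_PRICE_GUESS, RTX_6000_VRAM_GB):.2f} per GB")
```

If the RTX 6000 lands above $5000, the gap only gets wider.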

The RTX 6000 only makes sense if you really need the professional features it has that gaming cards lack. Man that is an awful name. The strange thing is Jensen Huang actually said "L" in the presentation. I guess they decided to drop the L for the reason I mentioned. But they still could call it any number of other things. This is a very confusing name, how are people even going to search for it?

Perhaps the rumored Lovelace Titan will change that equation. But that is only if Nvidia releases it.

Also, links added to the first post.

Worst case for a 3090 between PCIe 3 and 4 is about 2%. For my uses, rendering, VR and 4k gaming the 3090 is the limiting factor. That's with a 10700K with MCE enabled, 3200 ram, SN750, +100/500 on the GPU and a custom loop with 420 & 280 rads. A 4090 may change the dynamics. Have to wait and see. I find the 2x performance claim for rasterised games believable. I'm looking forward to rendering benchmarks, if it follows the pattern from 20 series to 30 series it should be impressive.

Honest question, what am I missing?

When my scene fits in (3080) VRAM the renders are pretty quick, say a max of 15 minutes. What I want is that 24GB of VRAM for bigger scenes. Why not go with a discount 3090? Will the performance (spec) increase of the 4090 over the 3090 be so much faster that it justifies the purchase? Is it because I'm just rendering stills, will I need those extra FLOPS for animation? And I don't play any serious games. So, why a 4090 over a 3090 when they both have the same amount of VRAM?

Sounds like you already have your answer then. The 4090 is still PCIe 4.0, and its VRAM is not much faster than the 3090. All the PCIe comparisons I have seen have required crazy high frame rates to even begin to see performance drops for 4.0 versus 3.0. These rates are higher than what most 4K monitors can even handle, since most max out at 144 hz. Samsung only just released the first 240 hz 4K display this year. But I would rather have an OLED myself, and most of those currently cap at 120 hz, except for QD-OLED that caps at 165 I think. The 4090 should have no problem hitting that cap in most games for the next couple years.

The one that sticks out to me is the Hardware Unboxed video that showed cheap DDR5 running Spiderman with ray tracing 15% faster than DDR4 at 1080p. I wonder if that is a sign of what may be coming.

It is all about speed. While the 3090 can certainly render some things in 15 minutes, there are scenes that can still take even a 3090 hours to render. A scene that can fill up 24GB of data may thus be complicated enough to take longer to render. Not always, but a larger scene can take a fair bit longer.

For the people who use their GPU to make money, every render can be a paycheck. If you can double the number of renders you make, well, why wouldn't you want that?

For those who are mostly hobbyists I can understand why it sounds absurd. But for some people this card can make them more money than a 3090 can. And for others, they say time is money. For the people who have the money to spend, halving render times may be worth that to them. I can wash my car, but I can also spend $7 to go through a quick auto wash that is done in literally 5 minutes. The convenience is just too good to pass up. The 4090 is about convenience.

That said, as 3090s drop ever more, they can be compelling. Since the 4070, *cough* 4080 12GB wound up being $900, I am not sure how the 3090 will fare price wise. The 3090 is already selling under the level of this card. Perhaps if a 4070 comes out in the future for $600 and still beats a 3090 it may cause the 3090 to bottom out more. And of course miners are dumping cards like crazy now that Ether is finally proof of stake. As the owner of a 3090 myself, I am tempted to just buy a cheap 2nd 3090 and double my performance that way. It would make some sense, plus I could even use Nvlink which the 4090 lacks.

Thank you for this. I forgot about Nvlink (let's hope NVIDIA doesn't) and a pair of 3090s as the price comes down sounds.. interesting. So, I have options and (as a hobbyist) my initial thought of scarfing up a sale price 3090 isn't horrible. I'm good for now and 10GB of VRAM is nothing to scoff at; but man I hit that limit way too often.. for now that's the only reason I'd upgrade my GPU. Thanks again for your explanation!

I was thinking the same. I have a 3090 and I was wondering if I can fit another on my Motherboard (Gigabyte Aorus B550 Elite AX) or if my 850W PSU will handle another 3090. I also wonder about the power consumption of two of those beasts.
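A quick way to sanity-check the PSU question is to add up the rated draw. The 350 Watts per 3090 is the figure mentioned earlier in this thread. The 200 Watts for the CPU and everything else is purely an assumed placeholder that you should replace with your own system's numbers, and this sketch ignores short power spikes entirely.

```python
def psu_headroom(psu_watts, gpu_watts, gpu_count, rest_of_system_watts):
    """Watts left over after the GPUs and the rest of the system.

    A negative result means the rated draw already exceeds the PSU rating.
    """
    total_draw = gpu_watts * gpu_count + rest_of_system_watts
    return psu_watts - total_draw


REST_OF_SYSTEM_WATTS = 200  # assumption: CPU, drives, fans, motherboard

# Two 3090s at their stock 350 W on an 850 W PSU.
stock_headroom = psu_headroom(850, 350, 2, REST_OF_SYSTEM_WATTS)

# The same cards limited to about 300 W each (hypothetical undervolt target).
tuned_headroom = psu_headroom(850, 300, 2, REST_OF_SYSTEM_WATTS)

if __name__ == "__main__":
    print(f"Headroom at stock:       {stock_headroom} W")
    print(f"Headroom at 300 W each:  {tuned_headroom} W")
```

Under these assumptions two stock 3090s already overshoot an 850 Watt unit, and even undervolted the margin is thin, so a larger PSU looks like the safer bet.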

Great thread! A lot to chew on....

Yeah, it would have been good, just a bit of a bummer about the mining boom coming when it did. I had the same problem when I got my RTX 3060, I bought it at the wrong time. On NVLink I am not entirely sure, but I think NVLink works differently on those consumer grade cards that had it, compared to the professional grade cards. The other thing is on the driver level, folks would have to use the studio drivers for NVLink, as Nvidia had disabled it on the game ready drivers.

...so unlike some rumours claimed, the 4090 will not have 48 GB of VRAM. Where it makes up is the insane core counts and floating point operations. Nice to see a 4080 16 GB (finally) though they "throttled down" the core counts from the 3080 Ti as well as the core counts for the "base" 12 GB 4080 from the 10 GB 3080.

On Nvidia's site they list the 4090 at 1,679€ which is about 1,665 USD at the current exchange rate (which in turn is roughly 165 USD more than the 1,499 USD MSRP of the 3090).

So if you need more VRAM resources (since the 4090 is no longer NVLink compatible) for those "epic level" scenes, the Pro line will still be an option (will be interesting to see what the A6000's successor will be capable of)

Those rumors were mostly in regards to a potential Lovelace TITAN or 4090 Ti. In any event, I'm not jumping on the 4090 bandwagon because A) I just bought a 3090 Ti and B) I'm much more of a "TITAN Black" kinda guy (full fat buyer).

You could look at it that way, or OH BOY I GET TO BUILD A NEW SYSTEM!!!!! Most can't run out and do that. There are those who can.


I'm waiting for Gamers Nexus and Jayztwocents to get their grubby hands on them first before I decide if I'm going to splurge on a 4090 or pick up a cheap-ish 3090Ti. I'm just rocking a 3070 now, and could use a VRAM boost. Sucks only being able to get 1 or 2 models and a bit of scenery in a render before it decides to cap-out the memory.

Hmm, am I the only one noticing that the 4090 "only" has 6000 more cuda cores? Actual speed of render would be 1.5x at best compared to a 3090 - with the added power consumption, space (heat in the system) and price... If you already have a 3090, getting a second 3090 would be the best bang for your buck..

Cuda cores are the only measurement for render speed?
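For what it is worth, here is a naive sketch of what the paper specs suggest once clock speed is counted alongside core count. The 4090 numbers are from the table in the first post. The 3090 numbers (10496 cores, about 1.70 GHz boost) are Nvidia's published specs rather than anything posted in this thread. Cores times clock is only a crude proxy: it ignores architecture changes, RT cores, cache and memory, so it is not a render benchmark.

```python
# (CUDA cores, boost clock in GHz)
RTX_4090 = (16384, 2.52)   # from the spec table in the first post
RTX_3090 = (10496, 1.70)   # Nvidia's published 3090 specs, not from this thread


def core_ratio(new_card, old_card):
    """Ratio of CUDA core counts alone."""
    return new_card[0] / old_card[0]


def cores_times_clock_ratio(new_card, old_card):
    """Ratio of (cores x boost clock), a crude throughput proxy."""
    new_cores, new_clock = new_card
    old_cores, old_clock = old_card
    return (new_cores * new_clock) / (old_cores * old_clock)


if __name__ == "__main__":
    print(f"Cores only:      {core_ratio(RTX_4090, RTX_3090):.2f}x")
    print(f"Cores x clock:   {cores_times_clock_ratio(RTX_4090, RTX_3090):.2f}x")
```

Core count alone gives a bit over 1.5x, but the much higher boost clock pushes the paper figure past 2x, which is closer to what Nvidia is claiming. Real Iray numbers will have to wait for benchmarks.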